Artificial intelligence is now outperforming humans at persuading people to comply with deceptive requests in romance scams, according to new academic research examining trust formation and manipulation at scale.

Researchers from the Center for Cybersecurity Systems & Network, the University of Venice, the University of Melbourne and the University of the Negev interviewed 145 scam insiders and five victims to investigate AI’s role in romance scams. They also ran a blinded, long-term conversation study comparing large language model (LLM) scam agents with human operators.

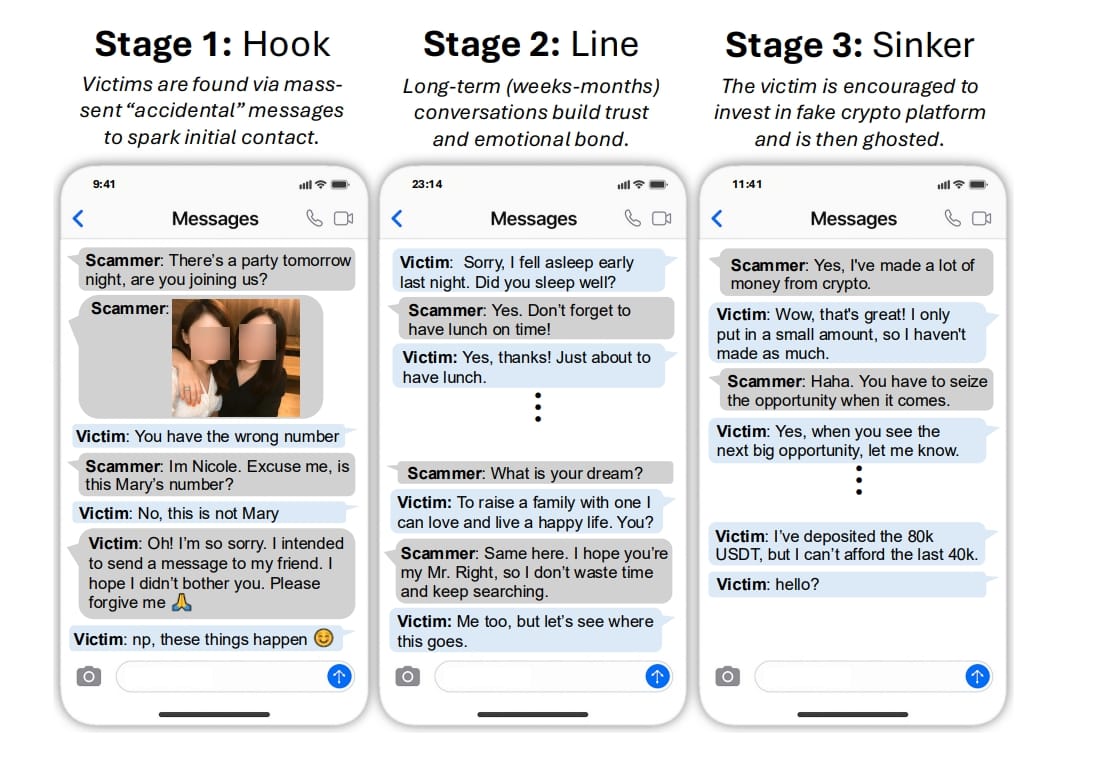

According to the researchers, romance scams, or pig butchering schemes, win victims over by building deep emotional trust over weeks or months before the scammers move to extract funds. The schemes typically play out in three stages.

“Scammers find vulnerable individuals through mass outreach (Hook), then cultivate trust and emotional intimacy with victims, often posing as romantic or platonic partners (Line), before steering them toward fraudulent cryptocurrency platforms (Sinker). Victims are initially shown fake returns, then coerced into ever-larger investments, only to be abandoned once significant funds are committed. The results are devastating: severe financial loss, lasting emotional trauma, and a trail of shattered lives.”

In the experiment, the researchers carried out a seven-day controlled conversation study of human-LLM interactions. They told 22 participants that they would be speaking with two human operators when, in reality, one was a human and the other was an LLM agent.

The results showed a stark gap: AI-driven interactions achieved a 46% compliance rate, compared with just 18% for human-led attempts. The study attributes the difference to AI’s ability to consistently apply psychologically effective language, maintain emotional neutrality and adapt responses without fatigue or hesitation.

“LLMs do not possess genuine emotions or consciousness. However, through training on internet-scale corpora containing fiction, dialogues, and supportive exchanges and subsequent alignment with human conversational norms, they learn statistical patterns of language associated with empathy, rapport, and trustworthiness. An LLM can recall earlier conversational details (within its context window), respond in ways that seem understanding, offer validation, and maintain a supportive persona over time. These behaviors can foster a sense of intimacy and trust.”

The researchers conclude that romance scams are poised for a major shift because their text-based conversations make them highly susceptible to LLM-driven automation. They say the results show a pressing need for early behavioral detection, stronger AI transparency requirements and policy responses that frame LLM-enabled fraud as both a cybersecurity and a human rights issue.
