Google is lifting the curtain on the training breakthroughs that propelled Gemini 3 to the front of the AI model race.

Google’s Deep Learning lead and Gemini co-head, Oriol Vinyals, says in a post on X that the jump from version 2.5 to 3.0 is one of the biggest performance gains the team has ever seen.

He says the key was not a clever trick or a new architecture but major improvements to both pre-training, the large-scale initial training phase, and post-training, the refinement that follows it. He presents the result as a challenge to the assumption that the gains from scaling are running out.

According to Vinyals, Google found better ways to train the model, unlocking gains in capability that let it push past the scaling walls that have frustrated other firms.

“The secret behind Gemini 3?

Simple: Improving pre-training & post-training.

Pre-training: Contrary to the popular belief that scaling is over…the team delivered a drastic jump. The delta between 2.5 and 3.0 is as big as we’ve ever seen. No walls in sight!

Post-training: Still a total greenfield. There’s lots of room for algorithmic progress and improvement, and 3.0 hasn’t been an exception.”

Gemini 3 was built from the ground up to process and understand text, images, video, audio, and code within a single, unified architecture. This structure enables deep cross-modal reasoning: the model can read a medical scan and write a diagnosis plan, analyze a sports video and break down a strategy, or turn a sketch into a working interface without switching models.

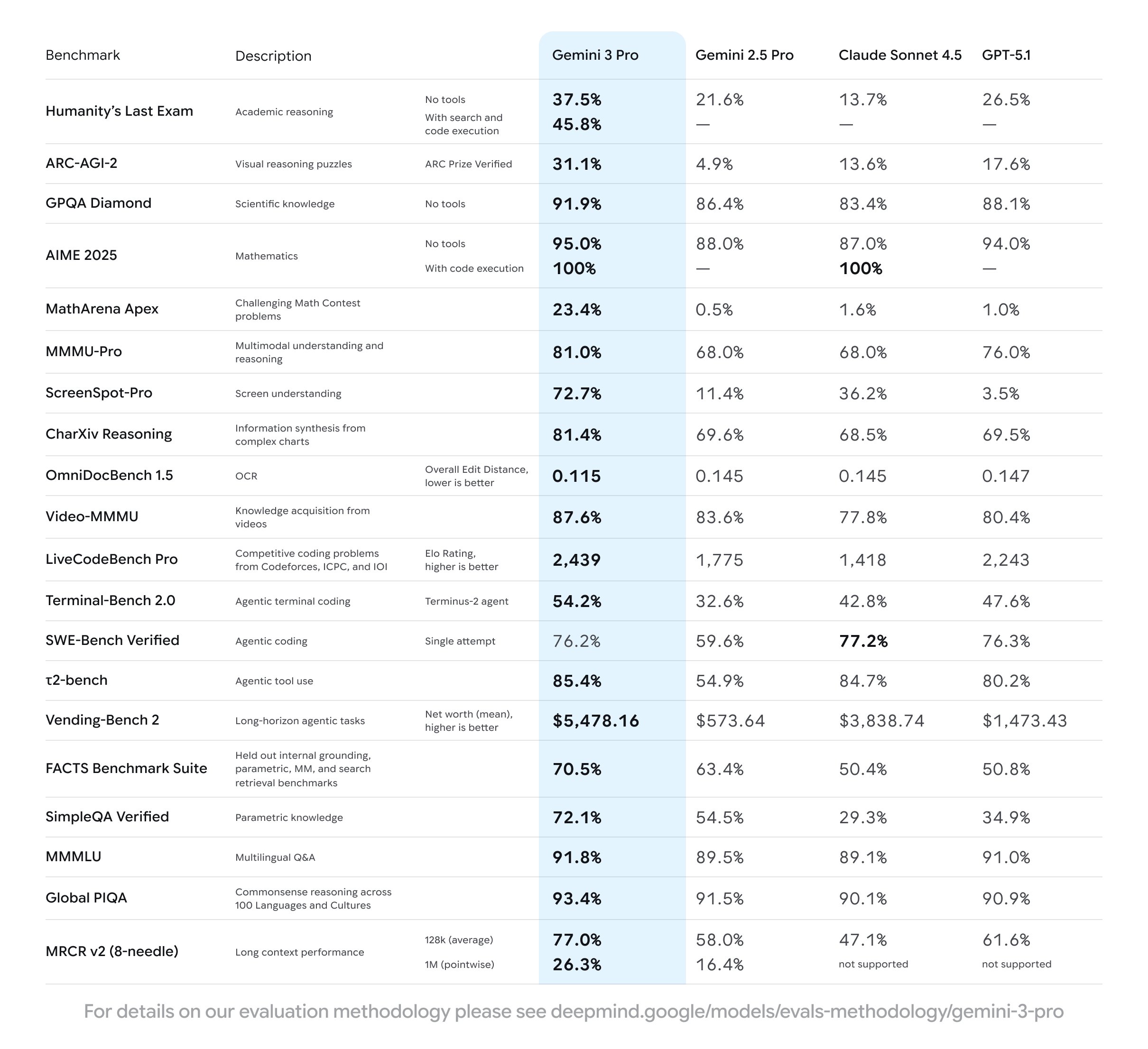

Google says these abilities also strengthen performance on planning tasks. Gemini 3 has posted new highs on research benchmarks that grade scientific reasoning, complex logic problems, and advanced multi-step analysis. The tech giant adds that Deep Think mode is now in an experimental phase. The feature is an enhanced reasoning mode that can use “parallel thinking” to dramatically improve results on the most demanding analytical tasks, pushing the frontier of complex problem-solving.

Google is pitching the upgrade as a shift toward more general-purpose intelligence. Its engineers believe that treating every form of data as part of one system, rather than routing each through a separate pipeline, will let Gemini 3 scale more efficiently as new capabilities are added.

On Sunday, reports emerged that OpenAI CEO Sam Altman told staff that “vibes out there will be rough” in the wake of Google’s progress on major pre-training pain points.

Disclaimer: Opinions expressed at CapitalAI Daily are not investment advice. Investors should do their own due diligence before making any decisions involving securities, cryptocurrencies, or digital assets. Your transfers and trades are at your own risk, and any losses you may incur are your responsibility. CapitalAI Daily does not recommend the buying or selling of any assets, nor is CapitalAI Daily an investment advisor. See our Editorial Standards and Terms of Use.