Americans remain cautious about trusting AI-generated search results, particularly when it comes to news and financial information, according to new data from Statista.

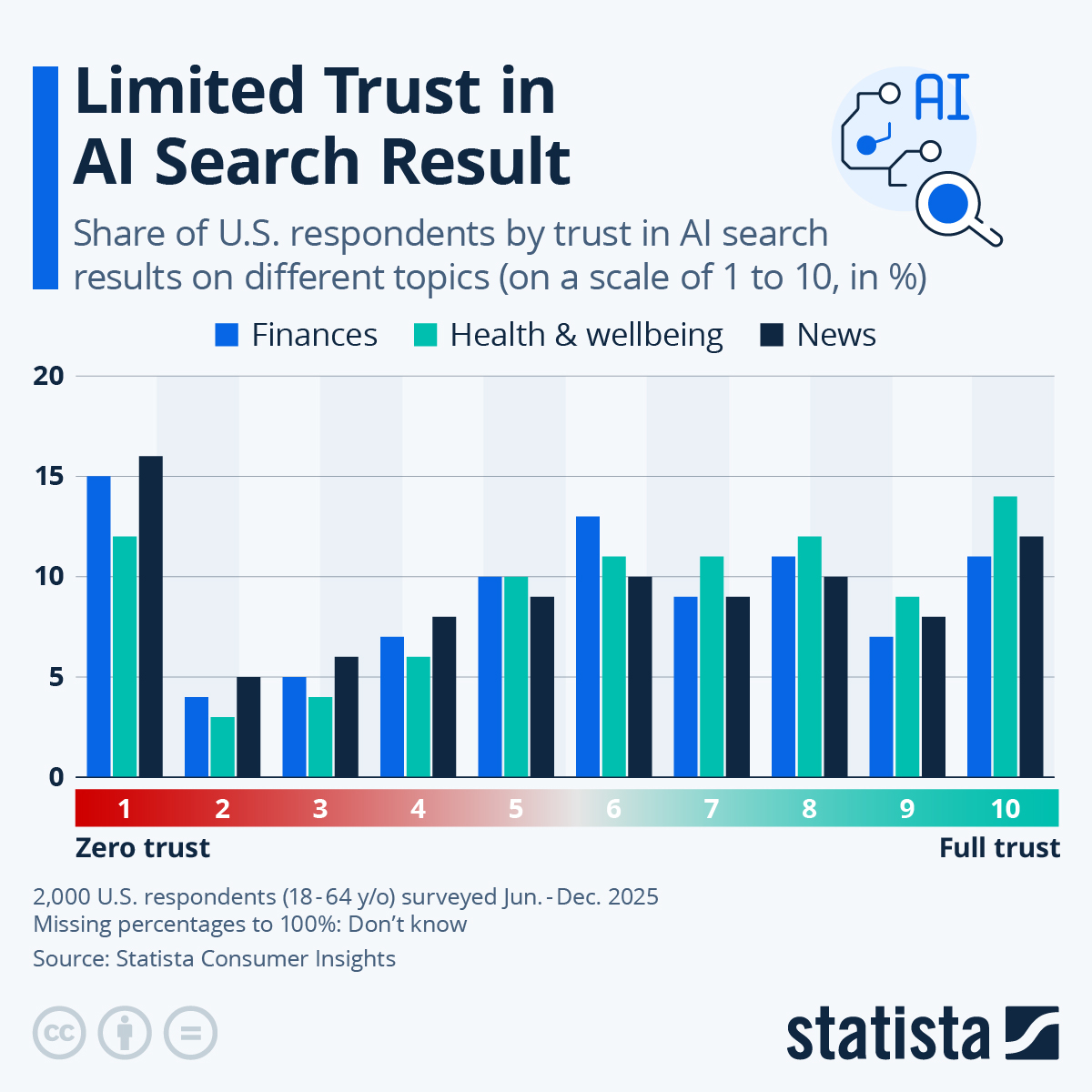

A Statista Consumer Insights survey of 2,000 US respondents aged 18 to 64, conducted between June and December 2025, found that only about one-third of Americans report a high level of trust in AI search results, defined as a rating of eight to ten on a ten-point scale.

The findings come as AI-generated answers are increasingly surfaced at the top of search engine results, meaning even users who do not actively use tools like ChatGPT, Gemini or Perplexity are regularly exposed to large language model outputs.

Statista says roughly 25% of respondents expressed low trust in AI search results, giving ratings between one and three, while close to 40% fell in the middle, indicating moderate trust. The results suggest many users are aware that AI outputs can be wrong, but may not consistently double-check answers, especially when pressed for time or when results align with expectations.

Statista also says trust varied by topic. Health and well-being searches received the highest confidence, with about 35% of respondents indicating high trust and only 19% reporting low trust. Finance and news ranked lower, with about 29% expressing high trust in financial results and roughly 27% saying they had little trust in AI-generated news coverage.

The data suggests that while AI search is becoming unavoidable in everyday online activity, skepticism remains entrenched in areas where accuracy and credibility are perceived as most critical.
