Stanford researchers say the world’s most powerful AI labs are becoming less transparent about how their systems are built, tested and deployed, raising fresh questions about whether the industry can be trusted to police itself as foundation models scale across the economy.

The findings come from a new Foundation Model Transparency Index released by researchers from Stanford, Berkeley, Princeton and MIT.

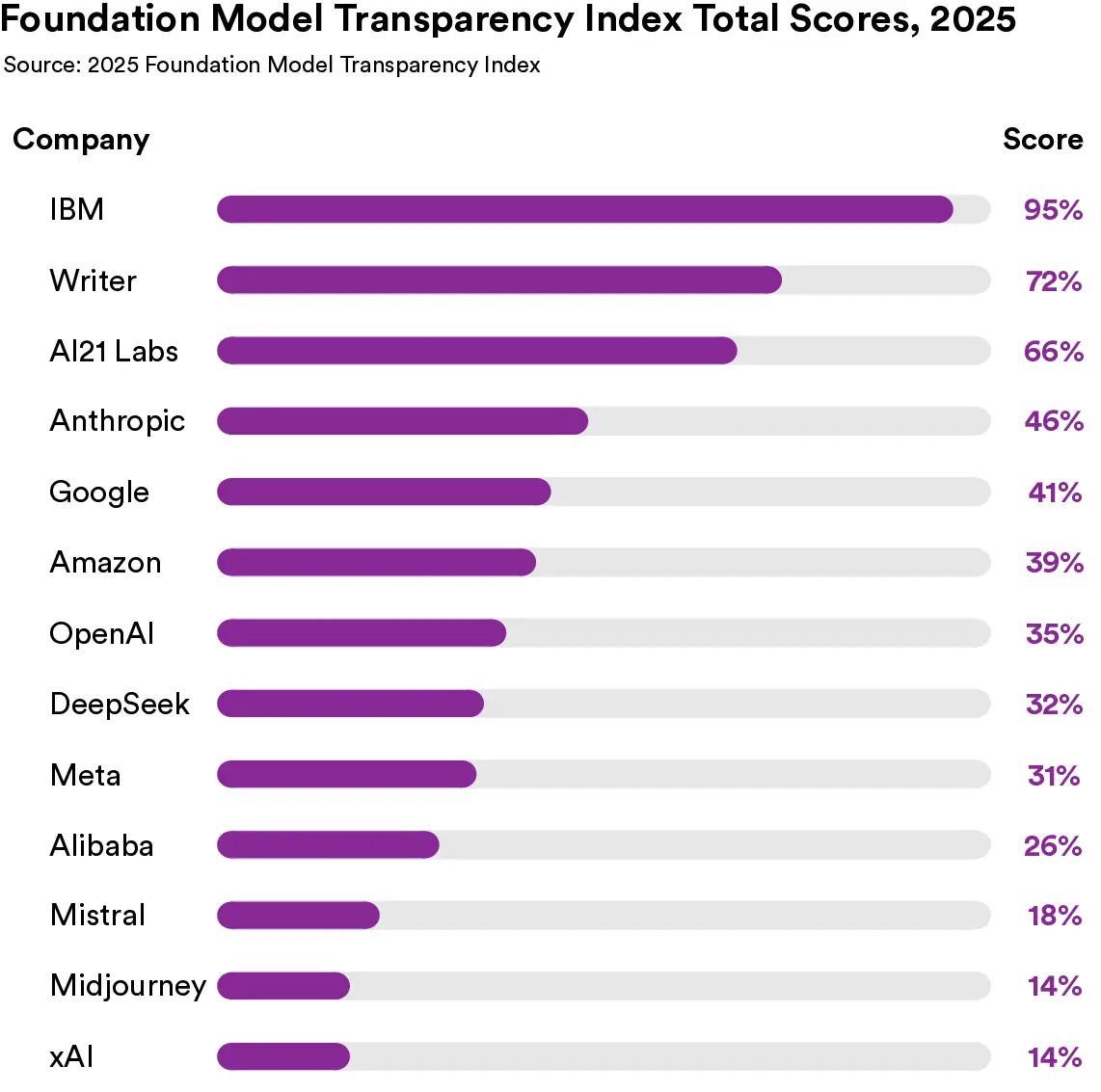

The 2025 edition scores 13 major AI companies on a 100-point scale, assessing how much each discloses on topics such as training data, risk mitigation and economic impact.

“The Foundation Model Transparency Index clarifies the complex terrain of the current information ecosystem. The entire industry is systemically opaque about four critical topics: training data, training compute, how models are used, and the resulting impact on society.”

The researchers find that the industry's average transparency score fell from 58 out of 100 in 2024 to just 40 in 2025. Scores also vary widely by company. IBM scored 95, the highest in the index's history, while xAI and Midjourney posted 14, among the lowest scores ever recorded. Meta and OpenAI, meanwhile, saw some of the steepest declines. Meta dropped from 60 to 31 after releasing Llama 4 without a technical report, and OpenAI now sits near the bottom among companies scored in all three years.

The researchers also warn against assuming that open-weight models are inherently more transparent. They note that several open developers score well, but others, including Meta, DeepSeek and Alibaba, provide little insight into the practices behind their releases.

“Openness does not guarantee transparency. While some open model developers are very transparent, others are quite opaque. Caution should be exercised in assuming that a developer’s choice to release open model weights will confer broader transparency about company practices or societal impact.”

The team closes by emphasizing that transparency is now determined almost entirely by corporate choice rather than by shared industry norms. They say this creates clear opportunities for policy intervention, especially in the areas that have remained opaque for three years.

“The Foundation Model Transparency Index can serve as a beacon for policymakers by identifying both the current information state of the AI industry as well as which areas are more resistant to improvement over time absent policy.”

Disclaimer: Opinions expressed at CapitalAI Daily are not investment advice. Investors should do their own due diligence before making any decisions involving securities, cryptocurrencies, or digital assets. Your transfers and trades are at your own risk, and any losses you may incur are your responsibility. CapitalAI Daily does not recommend the buying or selling of any assets, nor is CapitalAI Daily an investment advisor. See our Editorial Standards and Terms of Use.