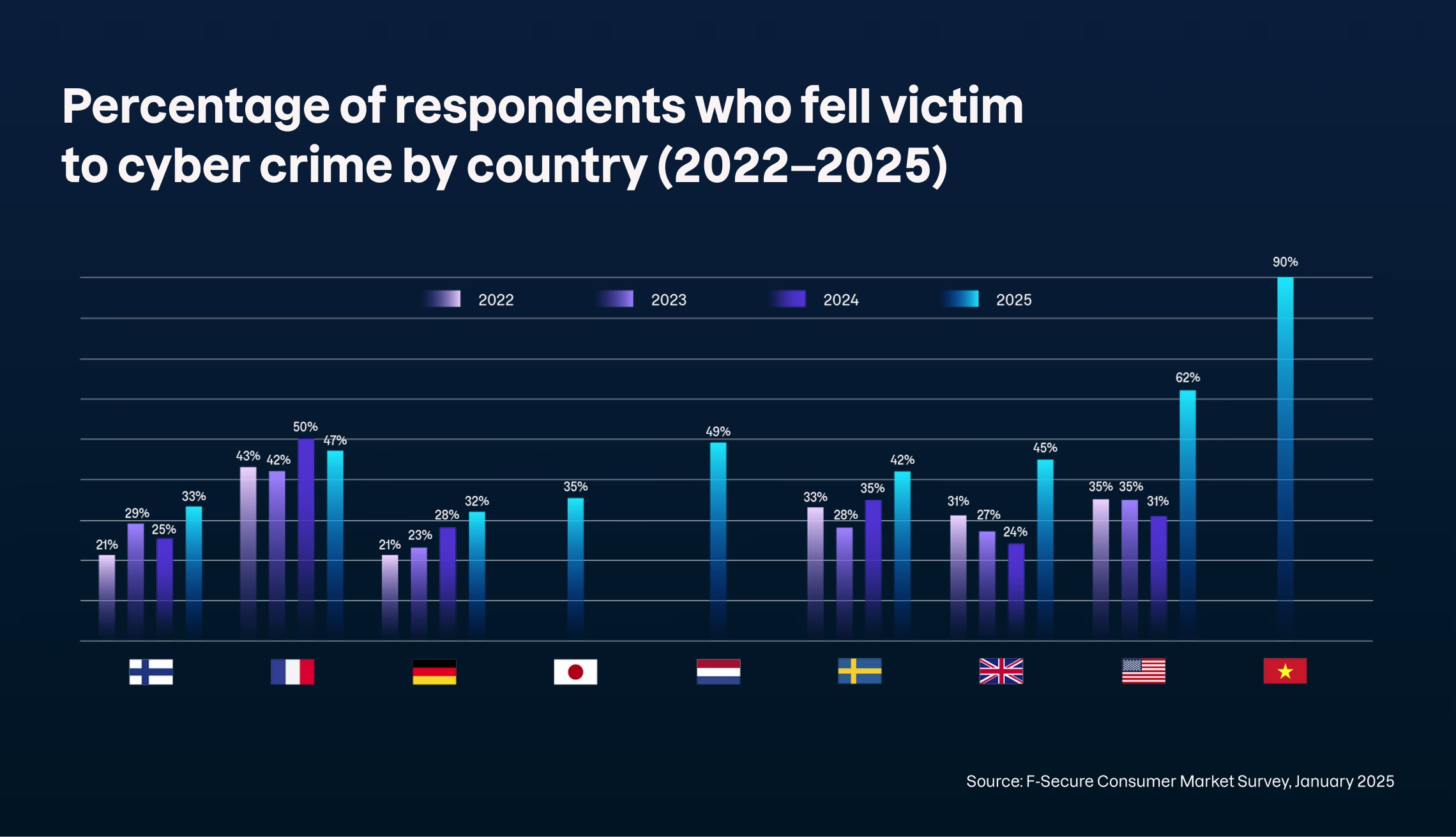

The share of Americans who fell victim to cybercrime doubled this year as scammers increasingly use AI to deceive victims, according to new research from Finnish cybersecurity firm F-Secure.

The company’s 2025 Scam Intelligence & Impacts Report finds that the share of Americans victimized by online crime rose from 31% in 2024 to 62% this year.

The US rate is still lower than that of Vietnam, where 9 in 10 residents report being victimized by thieves online.

F-Secure researchers also warn that AI tools have transformed the economics of online fraud, allowing attackers to automate deception at scale and mimic the tone and identity of real people in seconds.

Dr. Megan Squire, threat intelligence researcher at F-Secure, says the result is a wave of scams spreading faster and hitting victims harder than ever before.

“This year, AI is fueling a new wave of scams—helping threat actors scale faster and appear more convincing.”

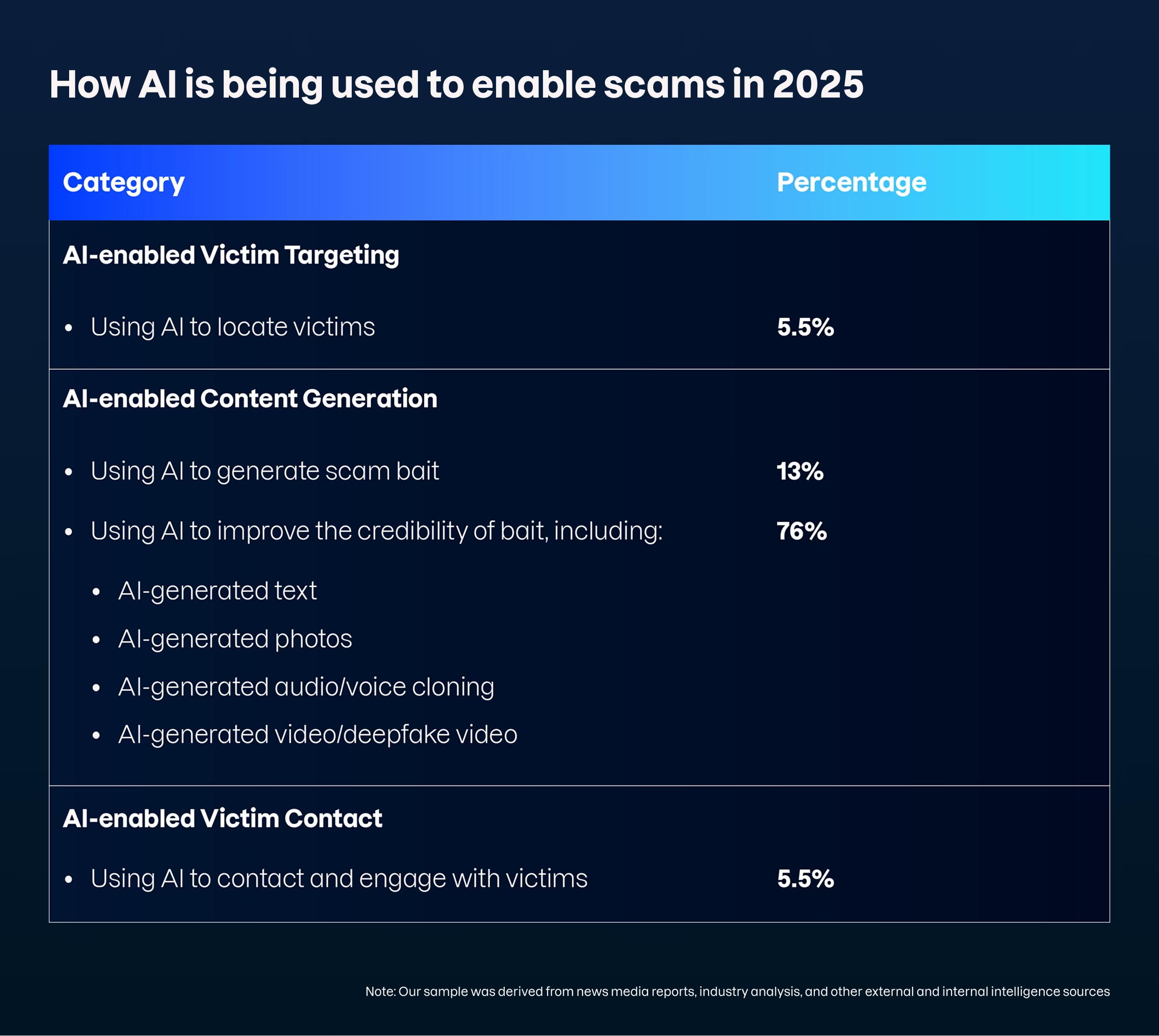

The report finds that 89% of AI-enhanced scams analyzed used artificial intelligence for content generation, most commonly to sharpen phishing emails or impersonate people through voice cloning and deepfake video tools.

“Voice cloning, for example, requires only a brief audio sample to build a replica of someone’s voice. This enables scammers to deliver emotionally charged messages that appear to come from relatives in crisis.”

AI video generators are also being weaponized to create fake celebrity endorsements for products and fraudulent investment schemes, tactics that exploit social proof and lure consumers into financial losses.

Laura Kankaala, F-Secure’s head of threat intelligence, warns that the line between legitimate automation and abuse is vanishing as AI agents gain autonomy.

“AI agents are designed to do more than answer questions—they can act on our behalf. That sounds promising for users, but also for scammers.”

F-Secure researchers describe the trend as a structural shift in online crime rather than a passing phase. They expect scammers to integrate AI more deeply across the “scam kill chain,” using it for reconnaissance, conversation hijacking, and real-time social engineering.

Disclaimer: Opinions expressed at CapitalAI Daily are not investment advice. Investors should do their own due diligence before making any decisions involving securities, cryptocurrencies, or digital assets. Your transfers and trades are at your own risk, and any losses you may incur are your responsibility. CapitalAI Daily does not recommend the buying or selling of any assets, nor is CapitalAI Daily an investment advisor.